During COVID-19, as enterprise leaders face tremendous pressure to keep their businesses agile and profitable, businesses have come to depend more heavily on technology trends to overcome their challenges.

With the strong emphasis on social distancing to contain the crisis, work-from-home has become the new normal, adding to business complexity. Everything from school, yoga classes, and grocery shopping to employee onboarding, medical consultations, and client interactions is now conducted virtually. This is not only unprecedented but also a new experience for each of us, since few were prepared for such a sudden shift.

These new habits, largely forced on us by the pandemic, have caused significant economic losses and pushed established businesses to modernize quickly. Services are already shifting, and organizations are reinventing their operating models. They are working to harness new-age technologies and capabilities such as artificial intelligence (AI), digitization, collaboration tools, and risk management to drive growth and innovation.

With this in mind, let’s look at some of the top technology trends expected to redefine the IT of the future.

Digital transformation in business

The COVID-19 pandemic has become a decisive catalyst for digital transformation. Technology leaders are now convinced that they must fast-track their digital transformation efforts to navigate the current crisis and stay profitable. Traditional brick-and-mortar businesses also recognize the importance of a robust virtual presence. Enterprises have no option but to accelerate their digital transformation to adjust to the new normal.

Enterprise technology leaders firmly believe that the current crisis has given organizations greater confidence in, and clearer visibility into, the best ways to tackle future disruptions. (See: Chandresh Dedhia, Head of Information Technology, Ascent Health)

One of the biggest challenges many enterprises still face is shifting the mindset of their employees. Over the next six to twelve months, enterprises of all sizes will make a strong effort to adopt new technologies, change their organizational structures, and instill new ways of working virtually across their teams. Learning resources and tools that help with upskilling and reskilling will be in demand.

Updating business continuity plans

COVID-19 is proving to be a litmus test of how well organizations can stay resilient and keep operating without interruption. The pandemic was a nightmare for many enterprises that were not equipped to manage an upheaval of such magnitude. In the months ahead, organizations will integrate new and advanced technologies into their Business Continuity Plans (BCP). Applications and tools for employee health checks, emergency response, and data backup will be modernized, strengthened, and restructured.

The focus will be on deploying technology solutions that not only enable remote working but also reduce operating expenses and increase business resilience.

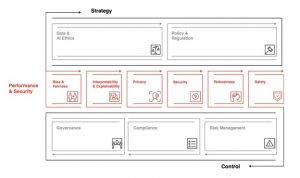

Application of AI in business

Organizations are increasingly interested in adopting artificial intelligence (AI) to accelerate growth, innovate, and disrupt the market. The next couple of years will see enterprises testing and deploying AI capabilities to predict human behavior and fortify their market share.

A recent study commissioned by global consulting major EY and the trade association Nasscom found that 60% of Indian executive leaders believe AI will disrupt their businesses within three years. (See: "Enterprises jump on the AI bandwagon but seat belts are few" and "Covid-19 lessons for accelerating AI usage")

Once offices reopen, technology leaders will rely heavily on AI-based intelligent data processing and contactless technologies to ensure that their employees maintain social distancing.

The deployment of chatbots to address customer grievances will accelerate. The banking sector, for instance, has already moved aggressively to deploy AI-based chatbots and tools that provide 24x7 support to customers. (See: "ICICI Prudential extends coverage of conversational AI Ligo" and "AI in banking now geared for a takeoff")

AI will gain particularly strong traction in the retail and supply chain industries. Since most consumers will continue to shop online for the foreseeable future, AI-driven technologies will help businesses identify purchasing patterns, launch new products, and provide an exceptional customer experience.

Stay tuned to Better World for the second part of this enterprise technology trends series, which will focus on cloud computing, blockchain, and cybersecurity.
