Humans have built highly complex systems, from vehicle convoys and airplanes to neural networks that communicate with each other, but we are only starting to scratch the surface of what artificial intelligence (AI) can do. It is also time we started paying more attention to ‘responsible AI.’

A future with artificial intelligence would be a mixed one. It could eliminate many of today’s human jobs, but it could also let us solve complex problems much faster than a human brain could.

As technology gets closer to achieving full intelligence, we may start seeing AI systems that are fully self-aware and can think, reason, and act as a human would. This raises concerns, because some people fear that as artificially intelligent computers become more advanced, they might become more intelligent than humans. For those who hold this view, the question is not if, but when, it will happen.

In the future, we will have teams of artificially intelligent robots that can do the menial tasks we traditionally assign to humans, such as vacuuming, picking up items, cooking, shopping, and more. Eventually, all jobs may be done by artificially intelligent machines. Even without this new development, all work will still be based on traditional methods such as task assignment, task resolution, and reward and punishment systems.

Today, we are beginning to see the first AI machine prototypes at work, and many exciting projects are under way. One such project is a robotic dog that can recognize objects, humans, and other dogs. Other projects include self-driving cars, self-piloted planes, artificially intelligent robots, and new weather systems.

The future of artificially intelligent robotic androids is exciting but also scary because of the autonomous capabilities of these machines. These androids may be built on two different types of artificial intelligence: a human-like non-conscious neural network (NCL) and a fully conscious human-like mind with its own memory, thoughts, and feelings. Some robots may combine both systems, while others may have only one. Many experts believe a full AI will be closer to human intelligence than anything current technology can produce.

Such concerns and apprehensions around AI have created a need for AI development and implementation to be more responsible in human, ethical, and legal terms.

Microsoft recognizes six principles that it believes should guide AI development and use (see link). These are fairness; reliability and safety; privacy and security; inclusiveness; transparency; and accountability.

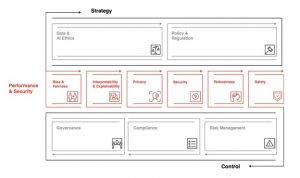

PwC has created a ‘Responsible AI Toolkit,’ which is a suite of customizable frameworks, tools, and processes designed to help organizations “harness the power of AI in an ethical and responsible manner, from strategy through execution.”

The field of ‘Responsible AI’ is generating more and more interest from a range of stakeholders, including governments, developers, human-resource experts, and user organizations.
