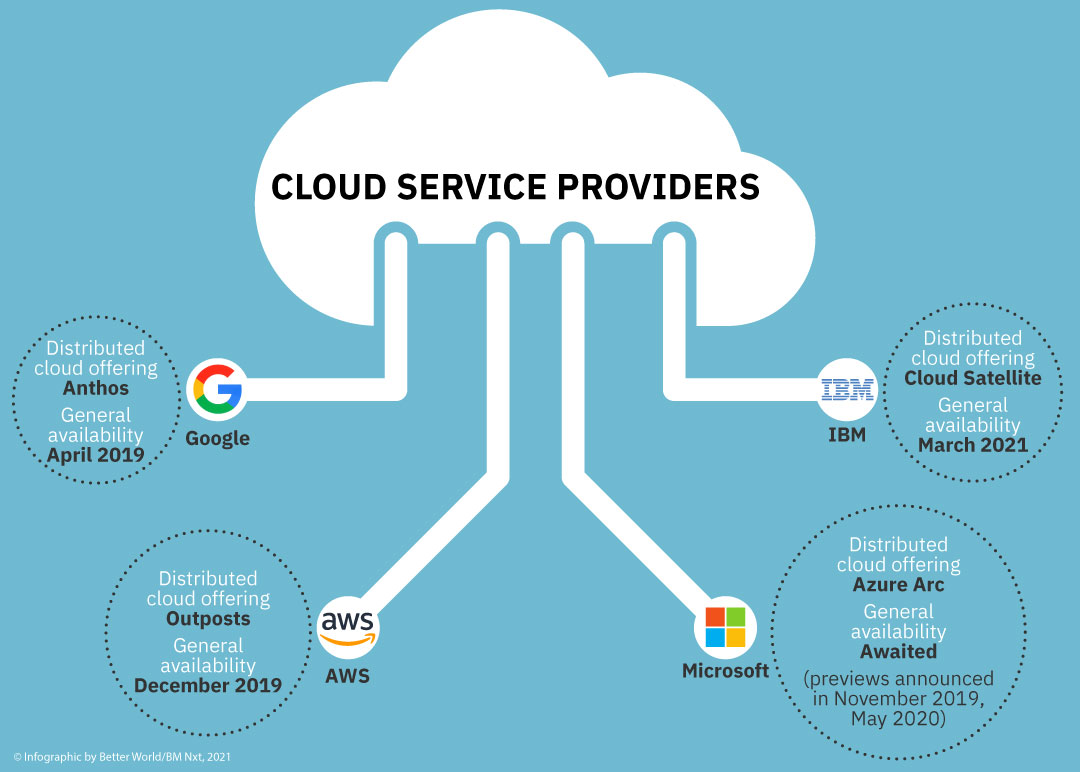

A titanic struggle for control of the cloud has begun in earnest with the emergence of various distributed cloud architectures. The shift is driven by enterprises’ need to move away from managing traditional infrastructure themselves and toward ‘utility cloud’ models, which can be far more sustainable as long-term strategies.

Amazon Web Services, IBM, Google, and Microsoft are the giants whose bets on such virtualization technologies have won them large shares of the cloud market. Several other companies are also active in this arena, and a closer look at the market reveals a number of smaller players as well.

Multiple drivers are fueling growth

The main drivers of distributed cloud adoption include (1) low latency, thanks to proximity to user organizations (e.g., on-premises or edge delivery); (2) better adherence to compliance and data-residency requirements; and (3) the need to support a rapidly growing number of IoT devices, utility drones, and similar endpoints.

With distributed cloud services, service providers are moving closer to their users. These services are offered not just as public-cloud-hosted solutions but also at the edge or in the on-premises data center. Combining an as-a-service delivery model with on-premises applications has its own advantages, such as easier provisioning of new services, simpler management, and cost reductions from the greater operational efficiency that streamlined infrastructure management brings.

Cloud service providers understand both the general needs of enterprises and their specific business requirements, and they use that expertise to build solutions that meet these objectives. Their services are also readily accessible over the internet, giving end users fast and convenient access.

Enterprises may worry that by switching to a distributed cloud computing service they will lose control of their data. However, cloud service providers offer robust security and monitoring solutions, and they ensure that customers retain full access to their data. Nor do enterprises that migrate on-premises software to a cloud service provider lose the expertise their employees have built up over their time in the organization.

Google Anthos: A first-mover advantage

Google formally introduced Anthos as an open platform that lets enterprises run an app anywhere: simply, flexibly, and securely. In a blog post dated 9 April 2019, Google noted that Anthos, by embracing open standards, let enterprises run applications unmodified on existing on-premises hardware or in the public cloud, and that it was based on the Cloud Services Platform announced earlier.

The announcement said that Anthos’ hybrid functionality was generally available both on Google Cloud Platform (GCP) with Google Kubernetes Engine (GKE) and in the enterprise data center with GKE On-Prem.

Consistency, another post said, was the greatest common denominator, with Anthos making multi-cloud easy thanks to its Kubernetes foundation, specifically the Kubernetes-style API. “Using the latest upstream version as a starting point, Anthos can see, orchestrate and manage any workload that talks to the Kubernetes API—the lingua franca of modern application development, and an interface that supports more and more traditional workloads,” the blog post added.

AWS Outposts: Defending its cloud turf

Amazon Web Services (AWS) has been among the first movers. On 3 December 2019, the cloud services major announced the general availability of AWS Outposts: fully managed and configurable compute and storage racks, built with AWS-designed hardware, that allow customers to run compute and storage on premises while seamlessly connecting to AWS’s broad array of cloud services. Outposts had been pre-announced on 28 November 2018 at re:Invent 2018.

“When we started thinking about offering a truly consistent hybrid experience, what we heard is that customers really wanted it to be the same—the same APIs, the same control plane, the same tools, the same hardware, and the same functionality. It turns out this is hard to do, and that’s the reason why existing options for on-premises solutions haven’t gotten much traction today,” said Matt Garman, Vice President, Compute Services, at AWS. “With AWS Outposts, customers can enjoy a truly consistent cloud environment using the native AWS services or VMware Cloud on AWS to operate a single enterprise IT environment across their on-premises locations and the cloud.”

IBM Cloud Satellite: Late but not left out

IBM has been a bit late to the distributed cloud party. It was only on 1 March 2021 that IBM announced that hybrid cloud services were generally available in any environment (on any cloud, on premises, or at the edge) via IBM Cloud Satellite. IBM’s partnership with Lumen Technologies, coupled with its long-standing presence in on-premises enterprise systems, could prove to be a key differentiator. According to an IBM press release, Lumen Technologies and IBM have integrated IBM Cloud Satellite with the Lumen edge platform so that clients can harness hybrid cloud services in near real time and build innovative solutions at the edge.

“IBM is working with clients to leverage advanced technologies like edge computing and AI, enabling them to digitally transform with hybrid cloud while keeping data security at the forefront,” said Howard Boville, Head of IBM Hybrid Cloud Platform. “With IBM Cloud Satellite, clients can securely gain the benefits of cloud services anywhere, from the core of the data center to the farthest reaches of the network.”

“With the Lumen platform’s broad reach, we are giving our enterprise customers access to IBM Cloud Satellite to help them drive innovation more rapidly at the edge,” said Paul Savill, SVP Enterprise Product Management and Services at Lumen. “Our enterprise customers can now extend IBM Cloud services across Lumen’s robust global network, enabling them to deploy data-heavy edge applications that demand high security and ultra-low latency. By bringing secure and open hybrid cloud capabilities to the edge, our customers can propel their businesses forward and take advantage of the emerging applications of the 4th Industrial Revolution.”

Microsoft Azure Arc: General availability awaited

In a blog post dated 4 November 2019, Julia White, Corporate Vice President, Microsoft Azure, announced Azure Arc, a set of technologies that unlocks new hybrid scenarios for customers by bringing Azure services and management to any infrastructure. “Azure Arc is available in preview starting today,” she said.

However, general availability of Azure Arc did not follow soon. Six months after the preview announcement, Jeremy Winter, Partner Director, Azure Management, published a blog post on 20 May 2020 noting that the company was delivering ‘Azure Arc enabled Kubernetes’ in preview to its customers. “With this, anyone can use Azure Arc to connect and configure any Kubernetes cluster across customer datacenters, edge locations, and multi-cloud,” he said.

“In addition, we are also announcing our first set of Azure Arc integration partners, including Red Hat OpenShift, Canonical Kubernetes, and Rancher Labs to ensure Azure Arc works great for all the key platforms our customers are using today,” the post added.

The announcement followed the launch of Azure Stack two years earlier, which enabled a consistent cloud model deployable on premises. Meanwhile, Azure was extended to provide DevOps for any environment and any cloud. Microsoft also enabled cloud-powered security threat protection for any infrastructure and unlocked the ability to run Microsoft Azure Cognitive Services AI models anywhere. According to the post, Azure Arc was a significant leap forward, enabling customers to move beyond hybrid cloud and truly deliver innovation anywhere with Azure.

Looking ahead

A distributed cloud presents a significant opportunity for businesses looking to improve their bottom line while also increasing their agility and versatility.

A distributed cloud is essentially public cloud computing distributed across multiple locations, with the capability to manage anything from a single computer to thousands of computers. The cloud promises the benefits of a global network without the worry of hardware, software, management, and monitoring issues. The distributed cloud goes a step further, adding assurances on latency, compliance, and the modernization of on-premises applications.
